NVIDIA A100 GPU platform has arrived on Advanced AI

Train high-parameter-count models on NVIDIA A100 GPU clusters, engineered by the NVIDIA research team and expert labs for maximum performance.

Why NVIDIA A100 on Advanced GPU Clusters?

The industry's most advanced AI computing hardware innovator, trusted by leaders

Train models with vast parameter counts on a single AI architecture

Develop and deploy comprehensive AI models with NVIDIA's unified GPU architecture. Enhance your computational workflow with seamless integration and exceptional processing capabilities.

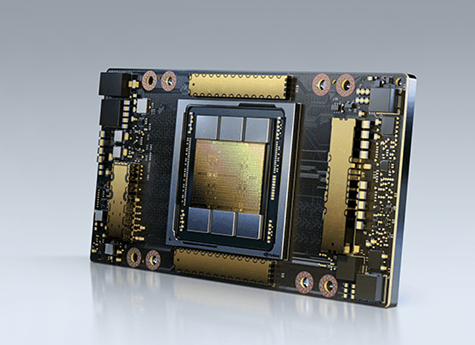

Advanced NVLink with 600 GB/s bidirectional bandwidth

Experience unparalleled data transfer speeds with NVIDIA's third-generation NVLink technology. Eliminate bottlenecks and accelerate your most demanding computational workloads.
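To put the 600 GB/s figure in context, here is a minimal back-of-the-envelope sketch. It assumes the quoted number is the aggregate bidirectional rate, so one direction gets at most half of it, and it ignores protocol overhead, topology, and contention. The function name `ideal_transfer_seconds` is hypothetical and only illustrates the arithmetic.

```python
# Assumption: 600 GB/s is the aggregate bidirectional NVLink figure quoted
# above, so a one-way copy can use at most half of it. Real transfers will
# be slower because of protocol overhead and contention.
NVLINK_BIDIRECTIONAL_GB_PER_S = 600.0


def ideal_transfer_seconds(
    payload_gb: float,
    bidirectional_gb_per_s: float = NVLINK_BIDIRECTIONAL_GB_PER_S,
) -> float:
    """Return a lower bound on the time to move payload_gb in one direction."""
    if payload_gb < 0:
        raise ValueError("payload_gb must be non-negative")
    if bidirectional_gb_per_s <= 0:
        raise ValueError("bidirectional_gb_per_s must be positive")
    one_way_gb_per_s = bidirectional_gb_per_s / 2.0
    return payload_gb / one_way_gb_per_s


if __name__ == "__main__":
    # Example: copying the full contents of a 40 GB GPU to a peer GPU.
    print(f"{ideal_transfer_seconds(40.0):.3f} s (idealized lower bound)")
```

Treat the result as a floor rather than a prediction: measured throughput depends on how many links connect the two GPUs and on the transfer size.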

AI-native storage design built for petabyte-scale datasets

NVIDIA's storage architecture is engineered specifically for massive AI training datasets. Manage, access, and process petabyte-scale information with unprecedented efficiency.

"Since evaluating NVIDIA A100 AI Clusters, I've been impressed by their exceptional support and technical expertise. NVIDIA's team, led by Jensen Huang, the architect of the hardware, and their support personnel have been instrumental in our success. Working with NVIDIA A100 has completely transformed our machine learning operations without inflating IT costs." (Alexander Reed, Chief Analytics Technologist)

Powering reasoning models and AI agents

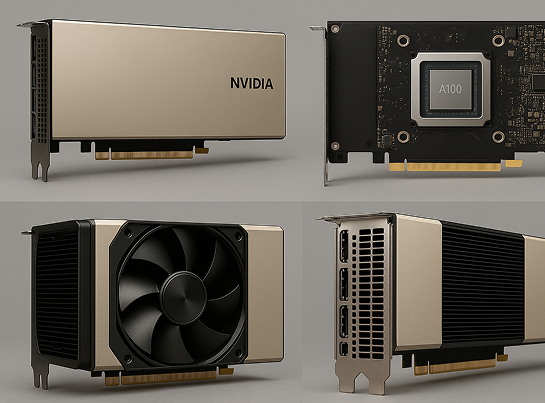

The NVIDIA A100 is engineered to support the training and deployment of sophisticated inference models.

40GB HBM2 Memory

The most advanced computational architecture for complex AI workloads. Each NVIDIA A100 delivers unparalleled processing capacity through NVIDIA's patented integration approach.

The A100 system introduces groundbreaking advancements in processor design and thermal efficiency, allowing sustained performance for your most demanding AI tasks.

NVIDIA's technical benchmarks demonstrate significant improvements across all key performance metrics compared to previous generation systems.
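As a rough illustration of what 40 GB of memory per GPU means for training, the sketch below estimates how many GPUs are needed just to hold a model's training state. It rests on assumptions that do not come from this page: mixed-precision training with Adam at about 16 bytes per parameter (2 for fp16 weights, 2 for fp16 gradients, 12 for fp32 master weights and the two Adam moments), perfect sharding of that state across GPUs, 1 GB taken as 10^9 bytes, and a hypothetical 80% usable fraction to leave room for activations and buffers. The names used are illustrative only.

```python
import math

GPU_MEMORY_GB = 40.0  # per-GPU memory from the heading above
# Assumption: mixed-precision Adam keeps roughly 16 bytes per parameter
# (2 fp16 weights + 2 fp16 gradients + 12 fp32 master weights and moments).
BYTES_PER_PARAM = 16


def model_state_gb(num_params: int) -> float:
    """Approximate size of weights, gradients, and optimizer state in GB."""
    return num_params * BYTES_PER_PARAM / 1e9


def min_gpus_for_model_state(
    num_params: int,
    gpu_memory_gb: float = GPU_MEMORY_GB,
    usable_fraction: float = 0.8,  # hypothetical headroom for activations
) -> int:
    """Smallest GPU count whose combined usable memory holds the model state.

    Assumes the state is sharded evenly across GPUs; activations, temporary
    buffers, and fragmentation are covered only by usable_fraction.
    """
    usable_gb = gpu_memory_gb * usable_fraction
    return max(1, math.ceil(model_state_gb(num_params) / usable_gb))


if __name__ == "__main__":
    for params in (1_000_000_000, 7_000_000_000, 70_000_000_000):
        print(params, model_state_gb(params), min_gpus_for_model_state(params))
```

This is only a sizing heuristic. Actual requirements depend on batch size, sequence length, and the parallelism strategy in use.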

NVIDIA A100

Technical specifications and performance benchmarks

ByteCompute's latest research & content

Learn more about running high-powered ByteCompute B200 GPUs available on Advanced AI. Discover how industry pioneers are leveraging ByteCompute GPU technologies to push the boundaries of AI innovation.

ByteCompute H100 Goes on Advanced AI - 50% Faster RPM Performance with Enhanced Precision Acceleration

5 min read

Transformer-Based Automated Paraphrasing System with ByteCompute GPUs: Technical Implementation Roadmap

5 min read

The Performance Boost Delivered by ByteCompute B200 GPUs in Large-Scale Language Processing

5 min read